The mapping implementation leverages the Spark JSON mapping module, which is based on Jackson Databind API. In case this is not possible, corrupt records will be handled according to one of the configurable strategies: drop them, put them in a dedicated column or make the entire task fail. In this case the connector will make its best effort to translate the data into the correct format, i.e. Since ArangoDB does not internally enforce a schema for the stored data, it could be possible that the read data does not match the target Spark SQL type required by the read schema. from the Spark Shell, nonetheless user provided schema is recommended for production use, since it allows for more detailed control. Automatic schema inference can be useful in case of interactive data exploration, i.e. The reading schema can be provided by the user or inferred automatically by the connector prefetching a sample of the target collection data. This mode is recommended for executing queries in ArangoDB that produce only a small amount of data to be transferred to Spark. When reading data from an AQL query, the job cannot be partitioned and parallelized on the Spark side. This allows partitioning the read workload reflecting the ArangoDB collection sharding and distributing the reading tasks heavenly. Each query hits only one DB-Server, the one holding the target shard, where it can be executed locally. The reading requests are load balanced across all the available ArangoDB Coordinators. The reading tasks can be executed in parallel in the Spark cluster and the resulting Spark DataFrame has the same number of partitions as the number of shards in the collection. When reading data from a collection, the reading job is split into many tasks, one for each shard in the source collection.

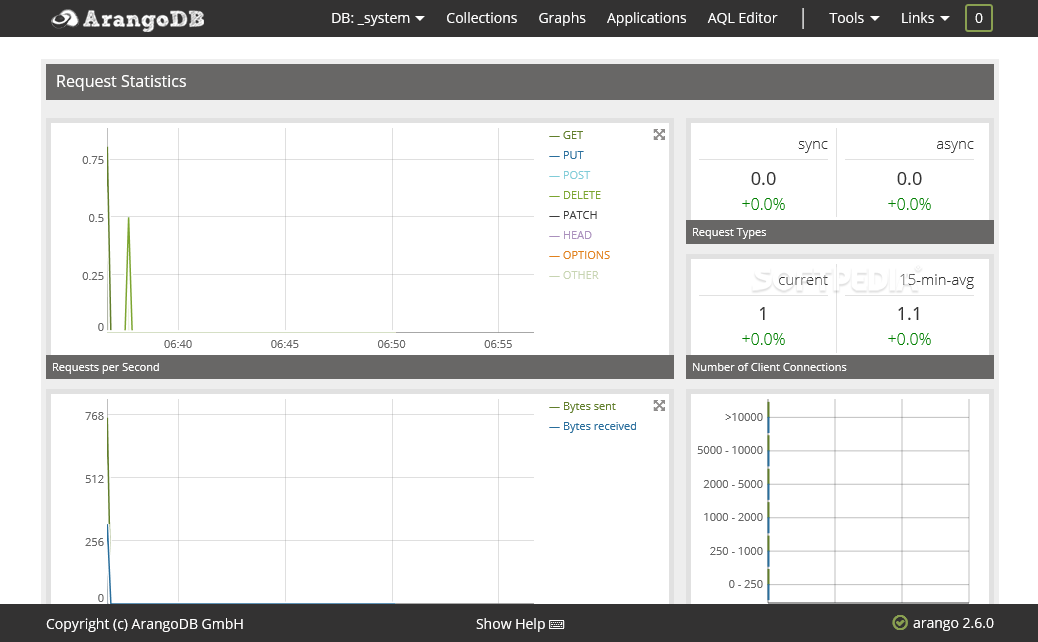

The connector can read data from an ArangoDB collection or from a user provided AQL query. While internally implemented in Scala, it can be used from any Spark high-level API language, namely: Java, Scala, Python and R. It works with all the non-EOLed ArangoDB versions. At the time of writing Spark 2.4 and 3.1 are supported. integration of ArangoDB in existing Spark workflowsĪrangoDB Datasource is available in different variants, each one compatible with different Spark versions.interactive data exploration with Spark (SQL) shell.uniform access to data from multiple datasources.ETL (Extract, Transform, Load) data processing.Source: PacktĪrangoDB Spark Datasource is an implementation of DataSource API V2 and enables reading and writing from and to ArangoDB in batch execution mode.

It exposes a pluggable DataSource API that can be implemented to allow interaction with external data providers in order to make the data accessible from Spark Dataframe and Spark SQL.

It is designed to process in parallel data that is too large or complex for traditional databases, providing high performances by optimizing query execution, caching data in-memory and controlling the data distribution. Nowadays, Apache Spark is one of the most popular analytics frameworks for large-scale data processing. We are proud to announce the general availability of ArangoDB Datasource for Apache Spark: a new generation Spark connector for ArangoDB.
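As a rough illustration of the two read modes this post describes (reading a whole collection versus reading the result of an AQL query), here is a minimal PySpark-oriented sketch. The data source name `com.arangodb.spark` and the option keys `endpoints`, `database`, `user`, `password`, `table`, `query` and `mode` are written from memory of the connector documentation and should be checked against the version you use. The mode values follow Spark's usual `PERMISSIVE` / `DROPMALFORMED` / `FAILFAST` naming, which is assumed here to correspond to the three corrupt-record strategies. Host names, the collection name and the helper functions are hypothetical.

```python
from typing import Dict, Optional

# Assumed data source name; verify against the connector documentation.
ARANGO_FORMAT = "com.arangodb.spark"


def base_options(endpoints: str, database: str, user: str, password: str) -> Dict[str, str]:
    """Connection options shared by both read modes (option keys are assumptions)."""
    return {
        "endpoints": endpoints,  # comma-separated Coordinator addresses
        "database": database,
        "user": user,
        "password": password,
    }


def collection_options(base: Dict[str, str], collection: str,
                       mode: str = "PERMISSIVE") -> Dict[str, str]:
    """Options for a collection read, which is split into one task per shard."""
    return {**base, "table": collection, "mode": mode}


def query_options(base: Dict[str, str], aql: str) -> Dict[str, str]:
    """Options for an AQL query read, which is not partitioned on the Spark side."""
    return {**base, "query": aql}


def read(spark, options: Dict[str, str], schema_ddl: Optional[str] = None):
    """Load a DataFrame from an existing SparkSession.

    If schema_ddl is None, the schema is left to the connector's inference.
    """
    reader = spark.read.format(ARANGO_FORMAT).options(**options)
    if schema_ddl is not None:
        # DataFrameReader.schema accepts a DDL-formatted string.
        reader = reader.schema(schema_ddl)
    return reader.load()
```

With a running `SparkSession` named `spark` and the connector on the classpath, a production-style read with an explicit schema might look like `read(spark, collection_options(base, "users", mode="FAILFAST"), "name STRING, age INT")`, while `read(spark, query_options(base, "FOR u IN users LIMIT 10 RETURN u"))` would suit a small exploratory query that relies on schema inference.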
